Interval-Arithmetic Vector Quantization for Image Compression

Objective

By integrating Convolutional Neural Networks (CNNs) with Interval-Arithmetic Vector Quantization (IAVQ), this study aims to develop an improved image compression method. The goals are:

- to use interval arithmetic to refine the quantization intervals;
- to implement interval-arithmetic-based quantization that reduces artifacts;
- to use CNNs to preserve attributes such as contrast and luminance;
- to assess compression quality quantitatively with metrics such as PSNR and SSIM.

A comparative analysis will set the proposed approach against alternative approaches to show where it performs better. The study also examines and validates the practical relevance of attribute preservation. The overall aim is to improve compression efficiency while retaining perceptual quality, which benefits the many applications that depend on efficient data processing and transmission.
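PSNR and SSIM, the two quality metrics named in the objective, can be computed as in the following NumPy sketch. PSNR follows the standard definition 10 * log10(MAX^2 / MSE). The SSIM shown here is a simplified single-window (global) variant. The standard SSIM averages the same statistic over local Gaussian-weighted windows, so these values will not match library implementations such as scikit-image's `structural_similarity`. The function names `psnr` and `global_ssim` are illustrative, not taken from the project.

```python
import numpy as np


def psnr(original: np.ndarray, reconstructed: np.ndarray, max_value: float = 255.0) -> float:
    """Peak Signal-to-Noise Ratio in dB: 10 * log10(MAX^2 / MSE)."""
    original = original.astype(np.float64)
    reconstructed = reconstructed.astype(np.float64)
    mse = np.mean((original - reconstructed) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def global_ssim(x: np.ndarray, y: np.ndarray, max_value: float = 255.0) -> float:
    """Single-window SSIM over the whole image (a simplification: the standard
    SSIM averages this statistic over local Gaussian windows)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * max_value) ** 2  # stabilizes the luminance term
    c2 = (0.03 * max_value) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
    # Add mild Gaussian noise as a stand-in for compression error
    noisy = np.clip(img + rng.normal(0, 5, img.shape), 0, 255).astype(np.uint8)
    print(f"PSNR: {psnr(img, noisy):.2f} dB")
    print(f"SSIM (global): {global_ssim(img, noisy):.4f}")
```

A higher PSNR and an SSIM closer to 1.0 both indicate a reconstruction closer to the original. Identical images give an infinite PSNR and an SSIM of exactly 1.0.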

Abstract

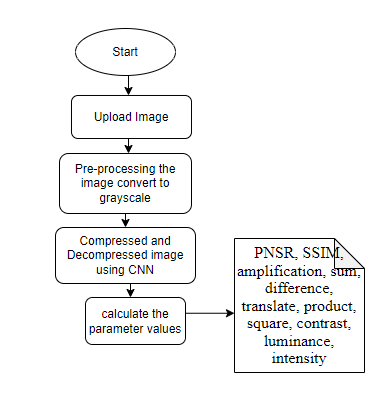

Image compression underpins the efficient storage and transmission of data in many applications. We propose a method called "Interval-Arithmetic Vector Quantization for Image Compression Using CNN". It combines interval arithmetic with convolutional neural networks (CNNs) to improve image compression performance.

Traditional vector quantization methods lose information through quantization errors, which reduces image quality. To address this, our approach uses interval arithmetic to represent quantization intervals as ranges rather than single values. This mitigates the effect of quantization errors and enables more accurate reconstruction of compressed images. CNNs excel at capturing spatial dependencies within images, so we use their feature extraction capabilities to guide the quantization process.

The proposed framework has two main stages: training and compression.

- In the training stage, a CNN learns feature representations that capture important image characteristics such as contrast, luminance, and intensity. Interval-arithmetic-based quantization is then applied to these features. It constructs quantization intervals that accommodate variations due to quantization errors.
- In the compression stage, the trained CNN extracts features from input images. The interval-arithmetic-based quantization then maps these features to the predefined quantization intervals using interval operations such as sum, difference, and product.

This process reduces the effect of quantization errors on the reconstructed images. The result is higher compression quality, as measured by PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index). Experimental evaluations on benchmark image datasets show that our Interval-Arithmetic Vector Quantization approach outperforms traditional vector quantization methods.
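The interval operations mentioned above (sum, difference, and product) follow the standard rules of interval arithmetic. Scaling (amplification) and shifting (translation) by a scalar follow the same rules. The sketch below implements them on a hypothetical `Interval` class. It illustrates the arithmetic only and is not the authors' implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi] with basic interval-arithmetic operations."""
    lo: float
    hi: float

    def __post_init__(self) -> None:
        if self.lo > self.hi:
            raise ValueError(f"invalid interval: [{self.lo}, {self.hi}]")

    def __add__(self, other: Interval) -> Interval:
        # Sum: [a, b] + [c, d] = [a + c, b + d]
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other: Interval) -> Interval:
        # Difference: [a, b] - [c, d] = [a - d, b - c]
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other: Interval) -> Interval:
        # Product: min and max over the four endpoint products
        products = (self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi)
        return Interval(min(products), max(products))

    def scale(self, k: float) -> Interval:
        # Amplification by a scalar; a negative k swaps the endpoints
        a, b = self.lo * k, self.hi * k
        return Interval(min(a, b), max(a, b))

    def translate(self, t: float) -> Interval:
        # Translation: shift both endpoints by t
        return Interval(self.lo + t, self.hi + t)

    def midpoint(self) -> float:
        return (self.lo + self.hi) / 2

    def width(self) -> float:
        return self.hi - self.lo


if __name__ == "__main__":
    x = Interval(118, 122)   # e.g. a pixel value of 120 known only to within +/-2
    e = Interval(-1, 3)      # an error range
    print(x + e)             # Interval(lo=117, hi=125)
    print(x - e)             # Interval(lo=115, hi=123)
    print(x * e)             # Interval(lo=-122, hi=366)
    print(x.scale(0.5))      # Interval(lo=59.0, hi=61.0)
    print(x.translate(10))   # Interval(lo=128, hi=132)
```

Every result interval is guaranteed to contain the exact result for any choice of values from the operand intervals. This guarantee is what lets an interval carry a quantization error bound through later computations.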
The proposed method achieves better compression ratios while preserving image quality and reducing quantization artifacts. Interval arithmetic also adds amplification (scaling) and translation (shifting) of intervals to the compression process, which improves image fidelity. This research contributes a novel image compression technique that combines interval arithmetic with CNNs. Validated by PSNR and SSIM, it shows that compression efficiency and quality can be improved simultaneously.
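The two-stage framework described in the abstract (training, then compression) can be sketched as follows. This is a minimal illustration built on several assumptions that do not come from the source:

- Raw 2x2 pixel blocks stand in for CNN features.
- The codebook is built with plain k-means.
- Each codeword's quantization interval is the per-dimension [min, max] of the training vectors assigned to it.

Encoding picks the codeword whose interval box is nearest to the input vector, with distance zero when every component falls inside [lo, hi]. Ties are broken by distance to the centroid. All function names (`train_interval_codebook`, `encode`, `decode`, and so on) are hypothetical.

```python
from typing import Tuple

import numpy as np


def to_blocks(img: np.ndarray, b: int) -> np.ndarray:
    """Split an (H, W) image into non-overlapping b x b blocks, one flattened vector per row."""
    h, w = img.shape
    h, w = h - h % b, w - w % b  # crop so both sides are multiples of b
    blocks = img[:h, :w].reshape(h // b, b, w // b, b).swapaxes(1, 2)
    return blocks.reshape(-1, b * b).astype(np.float64)


def from_blocks(vecs: np.ndarray, shape: Tuple[int, int], b: int) -> np.ndarray:
    """Inverse of to_blocks for an image whose sides are multiples of b."""
    h, w = shape
    return vecs.reshape(h // b, w // b, b, b).swapaxes(1, 2).reshape(h, w)


def _sq_dists(vecs: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Squared Euclidean distance from every vector to every point, shape (N, K)."""
    return ((vecs[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)


def train_interval_codebook(vecs: np.ndarray, k: int, iters: int = 20,
                            seed: int = 0) -> Tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Training stage (assumed): k-means centroids plus a per-dimension
    [lo, hi] interval spanning the training vectors assigned to each codeword."""
    rng = np.random.default_rng(seed)
    centroids = vecs[rng.choice(len(vecs), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = _sq_dists(vecs, centroids).argmin(axis=1)
        for j in range(k):
            members = vecs[labels == j]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    # The final assignment defines both the centroids and their intervals.
    labels = _sq_dists(vecs, centroids).argmin(axis=1)
    lo, hi = centroids.copy(), centroids.copy()
    for j in range(k):
        members = vecs[labels == j]
        if len(members):
            centroids[j] = members.mean(axis=0)
            lo[j], hi[j] = members.min(axis=0), members.max(axis=0)
    return centroids, lo, hi


def encode(vecs: np.ndarray, centroids: np.ndarray, lo: np.ndarray, hi: np.ndarray) -> np.ndarray:
    """Compression stage: map each vector to the codeword with the nearest interval box."""
    below = np.clip(lo[None] - vecs[:, None], 0, None)  # amount under the lower bound
    above = np.clip(vecs[:, None] - hi[None], 0, None)  # amount over the upper bound
    box_d = ((below + above) ** 2).sum(axis=2)
    cent_d = _sq_dists(vecs, centroids)
    # Nearest box first; ties (e.g. a vector inside several boxes) go to the nearest centroid.
    tied = box_d == box_d.min(axis=1, keepdims=True)
    return np.where(tied, cent_d, np.inf).argmin(axis=1)


def decode(indices: np.ndarray, centroids: np.ndarray, shape: Tuple[int, int], b: int) -> np.ndarray:
    """Reconstruct an 8-bit image, replacing each block with its codeword centroid."""
    rec = from_blocks(centroids[indices], shape, b)
    return np.clip(np.rint(rec), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    # Synthetic smooth test image (a stand-in; no benchmark dataset is assumed)
    yy, xx = np.mgrid[0:64, 0:64]
    img = (127 + 100 * np.sin(xx / 8.0) * np.cos(yy / 11.0)).astype(np.uint8)
    b, k = 2, 16
    vecs = to_blocks(img, b)
    centroids, lo, hi = train_interval_codebook(vecs, k)
    idx = encode(vecs, centroids, lo, hi)
    rec = decode(idx, centroids, img.shape, b)
    bits_raw = vecs.size * 8                           # 8 bits per pixel
    bits_idx = len(idx) * int(np.ceil(np.log2(k)))     # codebook storage not counted
    print("compression ratio (indices only):", bits_raw / bits_idx)
    print("MSE:", np.mean((img.astype(np.float64) - rec) ** 2))
```

For the 64x64 demo image with 2x2 blocks and k = 16, each 32-bit block is replaced by a 4-bit index. The index stream is therefore 8 times smaller than the raw pixels, with the codebook itself not counted. Replacing the raw blocks with CNN feature vectors would change only the vectors passed to `train_interval_codebook` and `encode`.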

Keywords: CNN, Image compression, Vector quantization

Specifications

H/W CONFIGURATION:

Processor - Intel Core i3 or equivalent

Hard Disk - 160GB

Keyboard - Standard Windows keyboard

Mouse - Two- or three-button mouse

Monitor - SVGA

RAM - 8GB

S/W CONFIGURATION:

• Operating System : Windows 7/8/10

• Front End : HTML, CSS, Bootstrap & JS

• Programming Language : Python

• Libraries : Flask, pandas, mysql.connector, os, smtplib, NumPy

• IDE/Workbench : PyCharm

• Technology : Python 3.6+

• Server Deployment : XAMPP Server