Feature Selection and Ensemble Learning Techniques in One-Class Classifiers: An Empirical Study of Two-Class Imbalanced Datasets on Vehicle Insurance Data

Objective

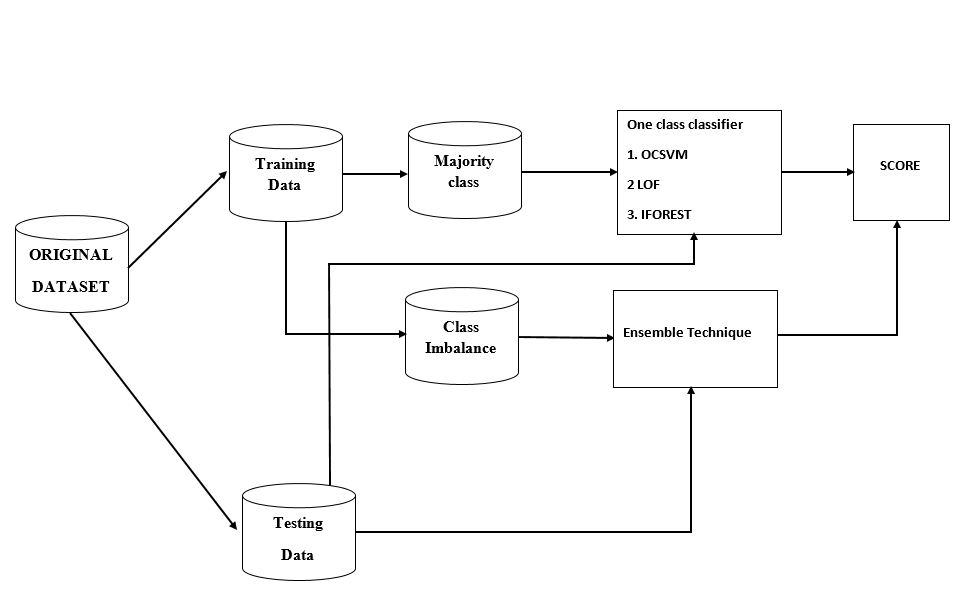

The objective of this project is to understand the performance of one-class classification (OCC) classifiers and to examine how much performance improves when feature selection is used to pre-process the majority-class training data and when ensemble learning is employed.

Abstract

Class imbalance learning is an important research problem in data mining and machine learning. Most solutions, including data-level, algorithm-level, and cost-sensitive approaches, are derived using multi-class classifiers, depending on the number of classes to be classified. One-class classification (OCC) techniques, in contrast, have been widely used for anomaly or outlier detection, where only normal (positive) class training data are available. In this study, we treat every two-class imbalanced dataset as an anomaly detection problem: the majority class, i.e. the normal or positive class, contains a large number of samples, and the minority class contains very few. The research objectives of this paper are to understand the performance of OCC classifiers and to examine how much performance improves when feature selection is used to pre-process the majority-class training data and when ensemble learning is employed. In most cases, though, performing feature selection does not improve the performance of the OCC classifiers. We therefore apply ensemble learning techniques when feature selection is used, which yields better accuracy than the OCC classifiers on their own.
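The idea described above can be illustrated with a minimal sketch. It is not the project's actual implementation: the Gaussian z-score scorer, the variance filter, the bagging-style ensemble, and all names (`GaussianOneClass`, `select_by_variance`, `fit_ensemble`, `ensemble_predict`) are assumptions chosen for illustration, and the data is synthetic. The sketch uses only the Python standard library; a real pipeline would more likely rely on the libraries listed under the specifications.

```python
import random
import statistics


class GaussianOneClass:
    """Hypothetical one-class model fitted on majority-class rows only."""

    def __init__(self, features, quantile=0.95):
        self.features = list(features)  # column indices this model uses
        self.quantile = quantile        # share of training rows accepted as normal

    def _score(self, row):
        # Sum of squared z-scores; larger means further from the majority class.
        return sum(((row[j] - m) / s) ** 2
                   for j, m, s in zip(self.features, self.means, self.stds))

    def fit(self, rows):
        cols = [[r[j] for r in rows] for j in self.features]
        self.means = [statistics.mean(c) for c in cols]
        self.stds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard against zero spread
        scores = sorted(self._score(r) for r in rows)
        idx = min(len(scores) - 1, int(self.quantile * len(scores)))
        self.threshold = scores[idx]
        return self

    def predict(self, row):
        # +1 = resembles the majority class, -1 = flagged as an anomaly.
        return 1 if self._score(row) <= self.threshold else -1


def select_by_variance(rows, min_variance=1e-3):
    """Assumed filter-style feature selection: drop near-constant columns."""
    return [j for j in range(len(rows[0]))
            if statistics.pvariance([r[j] for r in rows]) > min_variance]


def fit_ensemble(rows, features, n_models=5, seed=0):
    """Train several one-class models on bootstrap samples of the majority class."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = [rng.choice(rows) for _ in rows]
        models.append(GaussianOneClass(features).fit(sample))
    return models


def ensemble_predict(models, row):
    # Majority vote; a tie (possible with an even number of models) counts as anomaly.
    votes = sum(m.predict(row) for m in models)
    return 1 if votes > 0 else -1


rng = random.Random(42)
# Synthetic majority-class data: two informative columns plus one constant column.
majority = [[rng.gauss(0, 1), rng.gauss(5, 2), 1.0] for _ in range(200)]

selected = select_by_variance(majority)      # the constant column is dropped
models = fit_ensemble(majority, selected)

normal_point = [0.0, 5.0, 1.0]
anomaly_point = [8.0, -10.0, 1.0]
print(selected, ensemble_predict(models, normal_point), ensemble_predict(models, anomaly_point))
```

Training touches only majority-class rows, which is what distinguishes the one-class setting from an ordinary two-class classifier. Minority-class samples would be used only at evaluation time.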

NOTE: Please do not submit this to your college without our team's consent. The abstract may vary based on student requirements.

Block Diagram

Specifications

HARDWARE SPECIFICATIONS:

RAM: 8 GB (minimum)

Processor: Intel (preferred)

Hard Disk: 128 GB

SOFTWARE SPECIFICATIONS:

Operating System: Windows 7+

Server-side Script: Python 3.6+

IDE: Jupyter Notebook or Colab.

Libraries Used: Pandas, NumPy, Matplotlib, os, TensorFlow.
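As a convenience, the software specifications above could be checked with a short script before starting. This is a hedged sketch: the function name `check_environment` is hypothetical, and the import names (`pandas`, `numpy`, `matplotlib`, `tensorflow`) are assumed to be the usual module names for the listed libraries. It only looks the modules up and does not import them.

```python
import importlib.util
import sys

REQUIRED_PYTHON = (3, 6)  # from the specification: Python 3.6+
# Assumed import names for the listed libraries.
REQUIRED_MODULES = ["pandas", "numpy", "matplotlib", "os", "tensorflow"]


def check_environment():
    """Return a dict mapping each requirement to True (satisfied) or False."""
    report = {"python_ok": sys.version_info >= REQUIRED_PYTHON}
    for name in REQUIRED_MODULES:
        # find_spec returns None when the module cannot be located.
        report[name] = importlib.util.find_spec(name) is not None
    return report


report = check_environment()
for item, ok in report.items():
    print("{}: {}".format(item, "found" if ok else "MISSING"))
```

Any entry reported as missing would need to be installed (for example with `pip`) before running the project notebooks.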

Learning Outcomes

- About Python.

- About PyCharm.

- About Pandas.

- About NumPy.

- About HTML.

- About CSS.

- About JavaScript.

- About Database.

- About Machine Learning.

- About Artificial Intelligence.

- About how to use the libraries.

- Project Development Skills:

  - Problem analysis skills.

  - Problem-solving skills.

  - Creativity and imagination skills.

  - Programming skills.

  - Deployment.

  - Testing skills.

  - Debugging skills.

  - Project presentation skills.

  - Thesis writing skills.

Paper Publishing
