Identification of acute illness and facial cues of illness

Objective

The main objective of the project is to detect, from facial cues, who is affected by acute illness and who is not, and to let the user select the model that classifies the images with the best accuracy.
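The selection step in the objective can be sketched as follows. This is a minimal illustration, not the project's code: the function name `select_best_model` and the idea of passing validation accuracies as a dictionary are assumptions, and the model names and numbers in the usage line are placeholders rather than measured results.

```python
def select_best_model(accuracies):
    """Return the name of the model with the highest accuracy.

    `accuracies` maps a model name to its validation accuracy (0.0 to 1.0).
    Hypothetical helper: the project text does not specify how the
    comparison is implemented.
    """
    if not accuracies:
        raise ValueError("no models to choose from")
    # max() over the keys, ranked by their accuracy value;
    # on a tie the first model in insertion order wins.
    return max(accuracies, key=accuracies.get)


# Placeholder names and values, for illustration only.
best = select_best_model({"model_a": 0.5, "model_b": 0.75})
print(best)
```

In practice the dictionary would be filled from each trained model's evaluation on a held-out set.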

Abstract

Facial and bodily cues (clinical gestalt) in deep learning (DL) models improve the assessment of a patient's health status, as shown in genetic syndromes and acute coronary syndrome. It is unknown whether the inclusion of clinical gestalt improves the classification of acutely ill patients. As in previous research on DL analysis of medical images, simulated or augmented data may be used to assess the usability of clinical gestalt. In this study, we developed a computer-aided diagnosis system for automatic Rug sick detection using Facial Cue of illness images. Acutely sick people were rated by naive observers as having paler lips and skin, a more swollen face, droopier corners of the mouth, more hanging eyelids, redder eyes, and less glossy and patchy skin, as well as appearing more tired. Our findings suggest that facial cues associated with the skin, mouth, and eyes can aid in the detection of acutely sick and potentially contagious people. We employed deep transfer learning to handle the scarcity of available data and designed a Convolutional Neural Network (CNN) model along with five transfer learning methods: VGG16, VGG19, InceptionV3, Xception, and ResNet50. Existing methods use ResNet101, which did not achieve satisfactory accuracy and leaves room for improvement. Hence, the present approach, which uses the other transfer learning methods, is proposed. The proposed approach was evaluated on a publicly available Facial Cue of illness dataset.

Keywords: Rug healthy, Rug sick, Facial Cue of illness images, Deep Learning, CNN, VGG16, VGG19, InceptionV3, Xception, ResNet50.
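The transfer learning setup described in the abstract could look roughly like the sketch below, which uses the Keras applications API. It is an illustration under stated assumptions, not the project's implementation: the helper name `build_transfer_model`, the 224x224 input size, the dropout rate, the frozen backbone, and the single sigmoid output (two classes, Rug healthy vs. Rug sick) are all choices made here for the example. Each backbone also has its own `preprocess_input` function, which is not shown.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Backbones named in the abstract, mapped to their Keras constructors.
BACKBONES = {
    "VGG16": tf.keras.applications.VGG16,
    "VGG19": tf.keras.applications.VGG19,
    "InceptionV3": tf.keras.applications.InceptionV3,
    "Xception": tf.keras.applications.Xception,
    "ResNet50": tf.keras.applications.ResNet50,
}


def build_transfer_model(name, input_shape=(224, 224, 3), weights="imagenet"):
    """Build a binary classifier on top of a frozen pretrained backbone.

    Hypothetical helper: input size, dropout rate, and the sigmoid head
    are assumptions, not values taken from the project.
    """
    base = BACKBONES[name](
        include_top=False,  # drop the original ImageNet classifier head
        weights=weights,    # "imagenet" downloads pretrained weights
        input_shape=input_shape,
    )
    base.trainable = False  # freeze the backbone; only the new head trains

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)        # keep batch-norm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)  # collapse the spatial feature maps
    x = layers.Dropout(0.3)(x)
    outputs = layers.Dense(1, activation="sigmoid")(x)  # probability of one class

    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Calling `build_transfer_model("ResNet50")` returns a compiled model ready for `fit`. Building one model per backbone with the same head makes their accuracies directly comparable.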

NOTE: Please do not submit this to the college without our team's consent. This abstract varies based on student requirements.

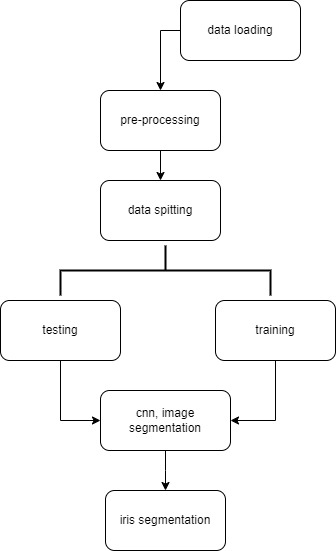

Block Diagram

Specifications

SYSTEM SPECIFICATIONS:

H/W Specifications:

- Processor: Intel Core i5 (or another Intel processor)

- RAM: 8 GB (min)

- Hard Disk: 128 GB

S/W Specifications:

- Operating System: Windows 10

- Server-side Script: Python 3.6

- IDE: PyCharm, Google Colab

- Libraries Used: NumPy, io, os, Flask, Keras, pandas, TensorFlow

Learning Outcomes

- Practical exposure to

- Hardware and software tools

- Providing solutions to real-time problems

- Working in a team or individually

- Working on creative ideas

- Testing techniques

- Error correction mechanisms

- Technology versions used

- Working of TensorFlow

- Implementation of Deep Learning techniques

- Working of CNN algorithm

- Working of Transfer Learning methods

- Building and creating models

- Scope of project

- Applications of the project

- About Python language

- About Deep Learning Frameworks

- Use of Data Science

Paper Publishing
