Emotion Recognition using Speech Processing

Objective

In this project, we survey the methodologies that process and classify speech signals in order to detect the emotions they carry, since emotions play a vital role in communication. Here, the emotions are detected from a person's speech, either directly or through a speaker.

Abstract

In human-machine interface applications, emotion recognition from speech signals has been a research topic for many years. Emotions play an extremely important role in human mental life: they are a medium for expressing one's perspective or mental state to others. Speech Emotion Recognition (SER) can be defined as the extraction of the speaker's emotional state from his or her speech signal.

There are a few universal emotions, including Neutral, Anger, Happiness, and Sadness, which any intelligent system with finite computational resources can be trained to identify or synthesize as required. In this work, we extract Mel-frequency cepstral coefficients (MFCC), chromagram, and Mel-scaled spectrogram features, in conjunction with spectral contrast and tonal centroid features. A Deep Neural Network (DNN) is used to classify the emotion.

Keywords: Emotions, Mel-Frequency Cepstral Coefficients, Chromagram, Mel-Scaled Spectrogram, Spectral Contrast, Tonal Centroid, Deep Neural Network.
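
To make the "Mel" in MFCC and in the Mel-scaled spectrogram more concrete, the following is a minimal sketch of the mel frequency scale, which spaces frequency bands more densely at low frequencies than at high ones. It assumes the widely used formula m = 2595 · log10(1 + f / 700). The function names are illustrative and not taken from the project, which would more likely rely on an audio library for feature extraction.

```python
import math
from typing import List


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to mels (assumed formula: 2595*log10(1 + f/700))."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel: convert mels back to Hz."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centers(n_bands: int, f_min: float, f_max: float) -> List[float]:
    """Center frequencies (Hz) of n_bands bands spaced evenly on the mel scale.

    The bands are equally spaced in mels between f_min and f_max,
    so they end up unevenly spaced in Hz.
    """
    mel_min = hz_to_mel(f_min)
    mel_max = hz_to_mel(f_max)
    step = (mel_max - mel_min) / (n_bands + 1)
    return [mel_to_hz(mel_min + step * (i + 1)) for i in range(n_bands)]


if __name__ == "__main__":
    # Hypothetical settings: 10 bands between 0 Hz and 8000 Hz.
    for center in mel_band_centers(10, 0.0, 8000.0):
        print(f"{center:8.1f} Hz")
```

Running the script prints band centers whose spacing in Hz grows with frequency, which is the property a mel filter bank uses when a spectrum is reduced to mel bands.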

NOTE: Please do not submit this to the college without the consent of our team. This abstract varies based on student requirements.

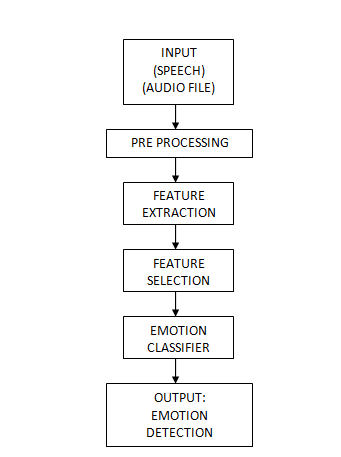

Block Diagram

Specifications

HARDWARE SPECIFICATIONS:

- Processor: Intel Core i3

- RAM: 4 GB (min)

- Hard Disk: 128 GB

- Keyboard: Standard Windows Keyboard

- Mouse: Two or Three Button Mouse

- Monitor: Any

SOFTWARE SPECIFICATIONS:

- Operating System: Windows 7+

- Server-side Script: Python 3.6+

- IDE: PyCharm

- Libraries Used: Pandas, NumPy, scikit-learn (sklearn), Flask, TensorFlow.

Learning Outcomes

- Importance of classification.

- Scope of speech emotion recognition.

- Importance of MFCC, chromagram, and Mel-scaled spectrogram.

- Using Tonal Centroid and Spectral contrast features.

- Importance of PyCharm IDE.

- Working of DNN.

- Uses of Flask.

- Connection to MySQL database.

- Process of debugging code.

- Input and output modules.

- How to test the project based on user inputs and observe the output.

- Project Development Skills:

  - Problem analysis skills.

  - Problem-solving skills.

  - Creativity and imagination skills.

  - Programming skills.

  - Deployment.

  - Testing skills.

  - Debugging skills.

  - Project presentation skills.

  - Thesis writing skills.
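
As a small illustration of the "Working of DNN" outcome listed above, here is a minimal sketch of only the final step of a classifier: turning the raw output scores of a network into emotion probabilities with softmax and picking the most likely label. The label list reuses the emotions named in the abstract. The scores and function names are hypothetical, and the project's actual network (presumably built with TensorFlow, given the listed libraries) is not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

# Emotion labels taken from the abstract; their order here is an assumption.
EMOTIONS = ["Neutral", "Anger", "Happiness", "Sadness"]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(scores: Sequence[float]) -> Tuple[str, float]:
    """Return the most likely emotion label and its probability."""
    if len(scores) != len(EMOTIONS):
        raise ValueError("expected one score per emotion label")
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return EMOTIONS[best], probs[best]


if __name__ == "__main__":
    # Hypothetical output-layer scores for one utterance.
    label, confidence = predict_emotion([0.2, 3.1, -0.5, 0.0])
    print(label, round(confidence, 3))
```

In a trained network, the scores would come from the last dense layer applied to the extracted speech features rather than from hand-written numbers.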

Paper Publishing
