Construction of Machine-Labeled Data for Improving Named Entity Recognition by Transfer Learning

Objective

In this paper, we propose a method for automatically generating training data and using the generated data effectively to reduce the labeling cost. The effect of the proposed method was verified with two DNN-based named entity recognition (NER) models: bidirectional LSTM-CRF and vanilla BERT. The proposed NER systems outperform the baseline systems in both English and Korean without the need for additional manual labeling.

Abstract

Deep neural networks (DNNs) require a large amount of manually labeled training data to perform well. However, manual labeling is laborious and costly. In this study, we propose a method for automatically generating training data and using the generated data effectively to reduce the labeling cost. The generated data (called "machine-labeled data") is produced using a bagging-based bootstrapping approach. However, using the machine-labeled data does not by itself guarantee high performance, because the automatic labeling contains errors. To reduce the impact of mislabeling, we applied a transfer learning approach. The effect of the proposed method was verified with two DNN-based named entity recognition (NER) models: bidirectional LSTM-CRF and vanilla BERT. We conducted NER tasks in two languages (English and Korean). On three Korean NER datasets, the proposed method yields average F1 scores of 78.87% (a 3.9 percentage point improvement) with bidirectional LSTM-CRF and 82.08% (a 1 percentage point improvement) with BERT. In English, performance increased by an average of 0.45 percentage points with the two DNN-based models.

Keywords: Named Entity Recognition, Bootstrapping, Bagging, Transfer Learning, Deep Learning.
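The bagging-based bootstrapping idea described in the abstract can be illustrated with a minimal sketch. The abstract does not spell out the exact procedure, so everything here is an assumption for illustration: the function names (`train_lookup_tagger`, `tag`, `bagging_label`), the `n_models` and `min_agreement` parameters, and the toy dictionary tagger that stands in for a real DNN-based NER model. The sketch trains several taggers on bootstrap resamples of the manually labeled data, lets them vote on each token of an unlabeled sentence, and keeps only sentences on which the taggers agree strongly.

```python
import random
from collections import Counter, defaultdict


def train_lookup_tagger(sentences):
    """Toy stand-in for an NER model: map each token to its most frequent tag."""
    counts = defaultdict(Counter)
    for tokens, tags in sentences:
        for token, tag_label in zip(tokens, tags):
            counts[token][tag_label] += 1
    return {token: c.most_common(1)[0][0] for token, c in counts.items()}


def tag(model, tokens):
    """Tag a sentence; tokens the model has never seen get the 'O' (outside) tag."""
    return [model.get(token, "O") for token in tokens]


def bagging_label(labeled, unlabeled, n_models=5, min_agreement=0.8, seed=0):
    """Return machine-labeled sentences (hypothetical helper, not the paper's code)."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap resample: draw len(labeled) sentences with replacement.
        sample = [rng.choice(labeled) for _ in labeled]
        models.append(train_lookup_tagger(sample))

    machine_labeled = []
    for tokens in unlabeled:
        predictions = [tag(model, tokens) for model in models]
        voted, confident = [], True
        for token_votes in zip(*predictions):
            label, votes = Counter(token_votes).most_common(1)[0]
            voted.append(label)
            if votes / n_models < min_agreement:
                confident = False  # the models disagree too much on this token
        if confident:
            machine_labeled.append((tokens, voted))
    return machine_labeled


# Usage: two manually labeled sentences, one unlabeled sentence.
labeled = [
    (["Seoul", "is", "big"], ["B-LOC", "O", "O"]),
    (["Visit", "Seoul"], ["O", "B-LOC"]),
]
unlabeled = [["Seoul", "is", "nice"]]
result = bagging_label(labeled, unlabeled)
```

In a setup like the one the abstract describes, the resulting machine-labeled sentences would serve as the first training stage, with the manually labeled data used afterwards for fine-tuning, so that labeling errors in the first stage have less influence on the final model. That two-stage order is an assumption about how the transfer learning step could be arranged.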

NOTE: Please do not submit this to the college without the consent of our team. This abstract varies based on student requirements.

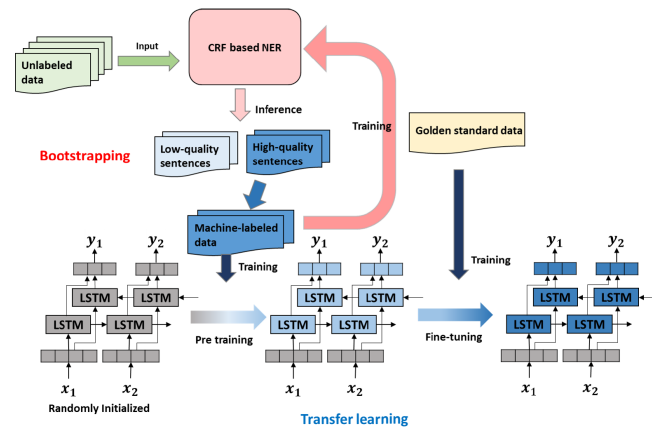

Block Diagram

Specifications

HARDWARE SPECIFICATIONS:

- Processor: i3/Intel Processor

- RAM: 4 GB (min)

- Hard Disk: 128 GB

- Keyboard: Standard Windows Keyboard

- Mouse: Two or Three Button Mouse

- Monitor: Any

SOFTWARE SPECIFICATIONS:

- Operating System: Windows 7+

- Technology: Python 3.6+

- IDE: PyCharm IDE

- Libraries Used: Pandas, NumPy, OpenCV, TensorFlow, Matplotlib.

Learning Outcomes

- Named entity recognition.

- Bootstrapping.

- Bagging.

- Transfer learning.

- Deep learning.

- Importance of PyCharm IDE.

- How ensemble models work.

- Process of debugging a code.

- The problem with imbalanced datasets.

- Benefits of SMOTE technique.

- Input and Output modules.

- How to test the project based on user inputs and observe the output.

- Project Development Skills:

- Problem analyzing skills.

- Problem solving skills.

- Creativity and imagination skills.

- Programming skills.

- Deployment.

- Testing skills.

- Debugging skills.

- Project presentation skills.

- Thesis writing skills.

Paper Publishing
